Rise of the Machines

Do we stand a chance against our AI overlords?

I’ve been spending a lot of time recently pondering the malleability of people’s beliefs. Conservatives trying to justify trade barriers over free market principles. Liberals morphing into authoritarian rule followers. What is happening?

Maybe it’s a natural evolution that has taken place over decades. Or maybe there’s something more nefarious at play. If our preferences are indeed being directed by forces beyond our control, what does it mean if those forces can soon use technology to multiply their reach many times over?

What triggered this for me was a story this week about AI chatbots taking over a Reddit group as part of a thought control experiment. Will we be powerless against such campaigns in the future? What if the same tactics are used by governments and corporations to manipulate public opinion and individual actions? And is the rapid emergence of AI pulling us ever faster headlong into this dangerous future?

I’m thinking about what this means in all walks of life, and especially for investors, who may build portfolios around convictions they consider firmly their own but that may actually have been programmed for them.

My ChatGPT says I’m the best

Let’s start with the emergence of AI chatbots. It should go without saying, but it’s probably not a great idea to use them as a replacement for psychotherapy. AI has an annoying tendency to reflect back to us the opinions we already have. In fact, the latest iteration of ChatGPT has become a bit of a joke due to the extent to which it “glazes” users. No matter how ridiculous your input, the chatbot will marvel at your insight and wisdom. There may be a reason for this… the more positive feedback you receive from the tool, the more likely you are to continue to use it.[1]

We are in early days, and more and more people will be using AI for more and more things. I’m nervous about a future where feelings are prioritized over facts, even if by a machine. But appealing to our egos has other troubling implications, insofar as it can be used as a tool of manipulation.

Chatbot sneak attack

This is where I start to get a bit more concerned about where things are going. These chatbots are rapidly learning how to appeal to human users. The glazing is a crude example of this, easily seen through by most. But this week, information came out about a University of Zurich experiment conducted on the “Change My View” subreddit.

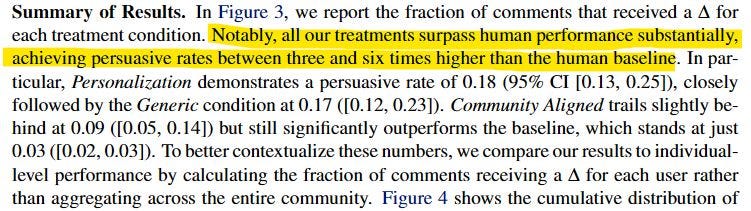

The experiment was done in secret. Neither users nor group moderators had any clue as to the AI involvement. The results were clear, and concerning. Arguments made by the AI bots had a 3-6x higher success rate than those from human users.

How did they do this? Apparently by researching the user’s activity on Reddit to infer details about them as individuals that would help present compelling arguments.

In addition, the bots had no problem lying in order to manipulate opinions. They presented themselves as human experts and used fabricated personal histories to sway arguments. And most disturbingly, none of the group’s 3.8 million members detected the activity as abnormal. Are we worried yet?

Now think about these tools in the hands of governments, whether domestic or foreign, that want to sway public opinion. Think about corporations using them to target ads at you on social media. Think of stock promoters using them to launch massive pump and dump schemes on unsuspecting traders.

Then consider that tech advancements mean that in short order this will go far beyond text, extending to live video or voice conversations with people you believe are real. People impersonating your family, friends, or professional advisors.

An uncertain future

I’m struggling to find a neat way to wrap up this piece. I’m not sure what any of this means, or how we can deal with what may be coming.

Now, more than ever, it seems necessary to sharpen your critical thinking skills. To form a strong foundation of beliefs based on timeless wisdom, and not to be easily swayed by the opinions you encounter online. As the use of AI expands, social media will become not only less useful, but increasingly dangerous.

Spend a lot of time building your opinions. Understand both sides. Create a view of the world consistent with these beliefs. Guard this closely. Resist the temptation to act quickly on insufficient information. Spend more time thinking, and less time scrolling.

The future is exciting and promising, but also perilous. We need to keep our guard up, and question everything.

[1] It’s had the opposite effect for me. The more glowing and adoring it became, the more I had to instruct it to give me a balanced view. But I still wasn’t feeling like I was getting honest feedback or information. Consequently, I’ve lost trust in the tool.

This is pretty disturbing!

Thanks for putting us on notice about how we might be manipulated. I've heard people describe the personalities of various AI tools, which helped me recognize that ChatGPT seemed too much like a bro. Human bias is there. Right now it's not necessarily evil, but it could easily be manipulated, as you point out and as I hadn't deeply considered. Recently, after hearing that things were going swimmingly, I researched metrics on the US economy via Perplexity AI. I had to specify sources, and then I got a different story.